Overview

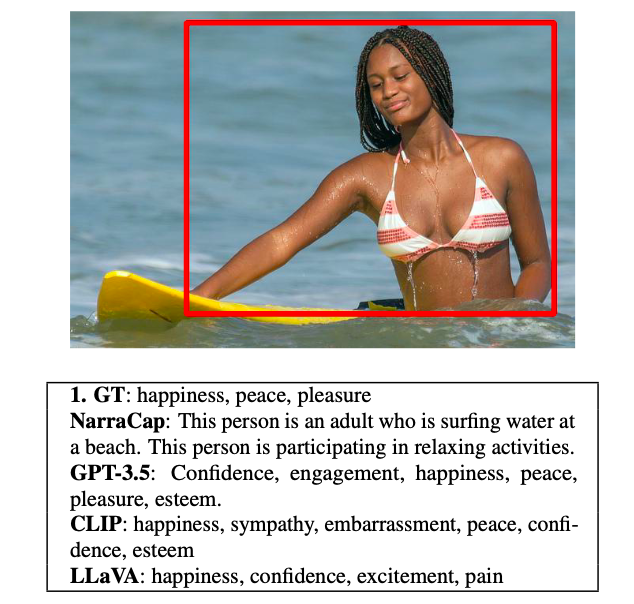

The visual emotional theory of mind problem is an image emotion recognition task that asks: "How does the person in the bounding box feel?" Facial expressions, body pose, contextual understanding, and implicit commonsense knowledge all contribute to the difficulty of the task, making this kind of in-context emotion estimation one of the hardest problems in affective computing today. The goal of this work is to evaluate the emotional knowledge embedded in recent large vision language models (CLIP, LLaVA) and large language models (GPT-3.5, MPT, Falcon, Baichuan) on the Emotions in Context (EMOTIC) dataset. To evaluate a purely text-based language model on images, we construct "narrative captions" relevant to emotion perception, using a set of 872 physical social signal descriptions related to 26 emotional categories, along with 224 labels for emotionally salient environmental contexts, sourced from writer's guides for character expressions and settings. We then evaluate the resulting captions in an image-to-language-to-emotion task. Experiments with zero-shot vision-language models on EMOTIC show that these models still fall considerably short of prior work trained on the dataset, and that captions built from physical signals and environment provide a better basis for emotion recognition than captions based only on activity. Based on our findings, we also propose that combining "fast" and "slow" reasoning is a promising way forward for improving emotion recognition systems.
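The exact procedure for matching signals and contexts to an image, and the wording of the captions and prompts, is not given here. As a rough illustration of the image-to-language-to-emotion idea, the sketch below assumes CLIP-style image and text embeddings are already available (toy 3-dimensional vectors stand in for them), picks the best-matching physical signal and environment labels by cosine similarity, joins them into a narrative caption, and wraps that caption in a prompt for a text-only language model. Only the 26 category names come from EMOTIC; the functions `top_k_labels`, `narrative_caption`, and `emotion_prompt`, the caption template, and the prompt wording are hypothetical.

```python
import math
from typing import Dict, List, Sequence

# The 26 discrete emotion categories annotated in EMOTIC.
EMOTIC_CATEGORIES = [
    "Affection", "Anger", "Annoyance", "Anticipation", "Aversion",
    "Confidence", "Disapproval", "Disconnection", "Disquietment",
    "Doubt/Confusion", "Embarrassment", "Engagement", "Esteem",
    "Excitement", "Fatigue", "Fear", "Happiness", "Pain", "Peace",
    "Pleasure", "Sadness", "Sensitivity", "Suffering", "Surprise",
    "Sympathy", "Yearning",
]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_labels(image_emb: Sequence[float],
                 label_embs: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Hypothetical zero-shot matching: rank text labels by similarity to the image, keep the k best."""
    ranked = sorted(label_embs,
                    key=lambda name: cosine(image_emb, label_embs[name]),
                    reverse=True)
    return ranked[:k]


def narrative_caption(signals: List[str], contexts: List[str]) -> str:
    """Hypothetical template joining physical signals and environment labels into one caption."""
    return (f"The person in the bounding box shows: {'; '.join(signals)}. "
            f"The setting is: {', '.join(contexts)}.")


def emotion_prompt(caption: str) -> str:
    """Hypothetical prompt asking a text-only language model to choose EMOTIC categories."""
    return (f"{caption}\nHow does the person in the bounding box feel? "
            f"Choose from: {', '.join(EMOTIC_CATEGORIES)}.")


# Toy embeddings standing in for real vision-language model outputs (assumption).
signal_embs = {
    "clenched fists": [0.9, 0.1, 0.0],
    "slumped shoulders": [0.1, 0.9, 0.0],
    "wide smile": [0.0, 0.2, 0.9],
}
context_embs = {
    "a funeral": [0.2, 0.8, 0.1],
    "a birthday party": [0.0, 0.1, 1.0],
}
image_emb = [0.1, 1.0, 0.1]

caption = narrative_caption(top_k_labels(image_emb, signal_embs, 1),
                            top_k_labels(image_emb, context_embs, 1))
print(caption)  # The person in the bounding box shows: slumped shoulders. The setting is: a funeral.
print(emotion_prompt(caption))
```

Keeping caption construction separate from prompting mirrors the two-stage framing above: any captioner (signal-based or activity-based) can feed the same text-only classifier, which makes the two caption styles directly comparable.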

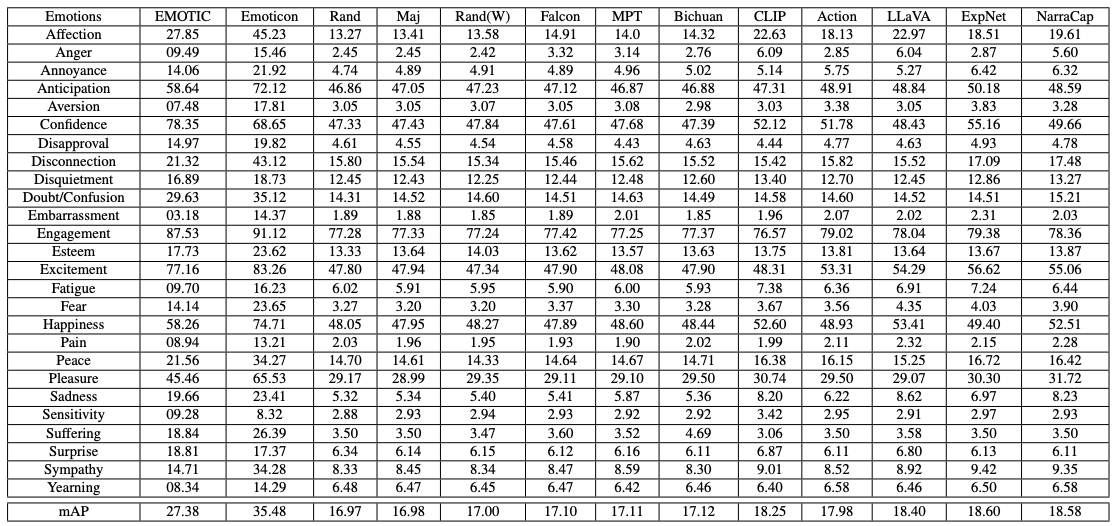

Results of different methods on the 26 emotion categories of EMOTIC.

Download

The generated captions can be downloaded below.

Coming soon!